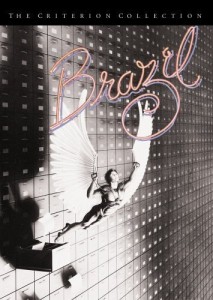

The New York Times reports a ridiculous case that was heard by the Supreme Court, which seems to come right out of the movie Brazil, in which a combination of events led to a defendant in a capital murder case losing his right to appeal in the state of Alabama. According to the article, a court had sent its ruling to two associates at a firm in New York who had worked on behalf of defendant Cory Maples. The problems started when the associates left. The firm then returned the judgment to the court marked “returned to sender”. The court clerk received the envelope and did nothing. The local counsel of record also failed to follow up with the appeal. Eventually, the window that defendants have to file appeals elapsed, at which point the prosecutor seemingly gloated directly to Mr. Maples.

The only good news in this case is that the Supreme Court is now hearing it, and at least in oral arguments they seem to have been as incensed at the callous treatment of a defendant as one would hope they should be.

So now my questions, and I have many:

- Why is it that this case had to get to the Supreme Court in the first place? Is administrative incompetence grounds for rushing to kill someone?

- Does the current state of law and societal view towards prosecutorial discretion need correction? Here, in a case where the prosecutor clearly could have weighed in to prevent a travesty, he instead seemingly chose to gloat. Doesn’t that argue for stronger judicial oversight?

- Should there be sanctions against the local lawyer who failed to follow up at all in a death penalty case?

- In this case, how broadly should the Court rule? They could simply state that the confluence of events led to a perverse situation that requires redress, and rule narrowly, or they could require that states shoulder at least some burden to see that defendants receive fair treatment. What would that look like?

- If this is what happens in death penalty cases, what sort of miscarriages of justice are taking place in other cases, and how do we know?

What do you think?
