Some of the brightest and most renowned people don’t lend their views to a single sentence; one clear example is Internet founding father Vint Cerf. Nevertheless.

Postel’s Law on Interoperability

In general, an implementation should be conservative in its sending behavior, and liberal in its receiving behavior.

— Jon Postel in RFC 760

Back when the Internet was small and called the ARPANET, getting two computers to talk to each other was very difficult. The specifications were young and immature, leading to variances in implementations. A simple example might be a text-based protocol like SMTP, where one implementation ended a line with just a linefeed (LF) where the specification calls for a carriage return followed by a linefeed (CRLF). Jon Postel addressed those variances in two complementary ways: don’t cause them, and if you see one, don’t just cough up blood and quit.

When specifications mature, it’s important not to be so lenient. For one, as they mature, the presumption is that more people, good and bad, are using the services they describe, and there will be those who abuse them. This happened with SMTP and email in particular. The email architecture of the Internet is quite open: you don’t need permission to send me mail.
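As a minimal sketch of the LF versus CRLF example, the following Python shows a receiver that tolerates a bare LF and a sender that always emits CRLF. The function names `receive_lines` and `send_lines` and the `strict` flag are illustrative assumptions, not part of SMTP or of any real library; the flag stands in for the tightening that becomes appropriate as a specification matures.

```python
CRLF = b"\r\n"


def receive_lines(data: bytes, strict: bool = False) -> list[bytes]:
    """Split received bytes into lines.

    Liberal mode (the default) accepts CRLF or a bare LF as a terminator.
    Strict mode (hypothetical flag) rejects any line not ended by CRLF.
    """
    pieces = data.split(b"\n")
    if pieces and pieces[-1] == b"":
        pieces.pop()  # drop the empty piece after the final terminator
    lines = []
    for piece in pieces:
        if piece.endswith(b"\r"):
            lines.append(piece[:-1])  # well-formed CRLF line
        elif strict:
            raise ValueError("line not terminated by CRLF")
        else:
            lines.append(piece)  # tolerate the sender's bare LF
    return lines


def send_lines(lines: list[bytes]) -> bytes:
    """Conservative sender: always terminate each line with CRLF."""
    return b"".join(line + CRLF for line in lines)
```

A receiver built this way can normalize a sloppy peer's input and still send only well-formed output, for example `send_lines(receive_lines(data))`.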


The Haas Warning

One sometimes has to be liberal in what one accepts because the specification itself lacks clarity. This led to Jeff Haas’ quip:


Of such “implied clarity” are many interop bugs made

The Tao of the IETF, as articulated by Dave Clark

We reject kings, presidents and voting. We believe in rough consensus and running code.

Over the years, a lot of bright ideas brought to standards bodies have failed. Many of those bright ideas never saw a single implementation, or even a line of code. We call such failures thought experiments. There is nothing wrong with doing a science experiment, but it should not be standardized. In fact, you don’t need a standard unless you want two or more implementations to be interoperable. The best way to avoid this problem is as follows:


- Have general agreement that there is a real, clearly defined problem

- Have running code to demonstrate a potential solution

- Have broad agreement – or rough consensus of the technical community – on that solution.

The first point helps us focus on what is important. The second point focuses us on what is practicable. The third point finds the path to agreement as to actual protocol behavior.

The Knowles Doctrine

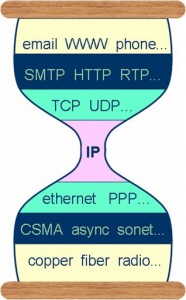

IP on everything!

While Vint Cerf made the saying popular in the late nineties (in fact he did a striptease at one meeting, ending up in a tee-shirt and shorts, with the saying on it), I first heard this from the lips of Stev Knowles. In the earlier days of the IETF we had some rough edges, but the points made even with those rough edges were very important. The layer model was such that there really was nothing IP couldn’t run over. Today, IP runs atop Ethernet, MPLS, fiber, 3G, T1, SDLC, WiFi, numerous cable interfaces, ATM and ADSL, and many other things. David Waitzman made this point through a brilliant April Fools’ RFC, RFC 1149, IP over Avian Carriers. Stev put it quite simply, and the double entendre of the homonym was quite intentional.


IP is the only common protocol above which and below which all other protocols sit. And while Steve Deering doesn’t lend himself well to a good quip, he was responsible for perhaps the best illustration of how this all works. This hourglass model was at first taken as an axiom or religious statement. Back in the earlier days there was no single protocol in the middle. Instead, other protocols such as DECnet, AppleTalk, and SNA also vied for the spot, and at times were more popular in enterprise deployments. Now, not only does IP sit in the middle alone, but there is research from the Georgia Institute of Technology that claims that only a single protocol CAN sit in the middle at this point.


O’Dell’s Axiom

The only real problem is scaling.

Mike O’Dell was the routing architect of UUNET, one of the first commercial Internet service providers in the world, and was for a time the routing area director at the IETF. During that time the routing system groaned under the weight of explosive Internet growth. It was by the skin of its teeth that the Internet managed to function as well as it did in 1994. This was around the time that Mike made the above pointed statement. It goes well beyond the Internet, and of course is hyperbolic, but it raises an important design consideration: sometimes things that seem to work well in small systems simply do not scale. The trick is to develop simple systems that do scale. In a way, O’Dell’s Axiom is a restatement of Occam’s Razor.


Rekhter’s Law

Addressing can follow topology, or topology can follow addressing. Choose one.

Devices in the middle of the Internet route packets based on the destination address in the IP header. There are over 4 billion IP version 4 addresses and roughly 3.4 × 10^38 version 6 addresses. No device can know how to get to them all, as there are simply too many. And so the information is aggregated in the address with the addition of a small bit of information known as a prefix length. I might have a route to the first 16 bits of address 128.6.4.4, but I don’t know precisely where at Rutgers that machine is. It’s the same as the postal system. The postal service in Singapore is not likely to know where 420 California St. is in Palo Alto, California, but can route a package to either California or the United States. The key is that to route the package to California, Singapore must have explicit routing information (e.g., put that package on Flight 23 to Los Angeles).

Yakov Rekhter made the point that either the way the network is laid out is based on the address itself, or the address must be organized based on the way the network is laid out, but you can’t have it both ways. The advantage of having more information in the topology is that more than one route to a location can be described. The disadvantage is that you have to store and process all that information.
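The address counts and the prefix lookup described above can be sketched with Python's standard `ipaddress` module. The route table below is purely illustrative: the /16 mirrors the 128.6.4.4 example in the text, while the /24 entry and all next-hop labels are hypothetical, added only to show how the longest matching prefix wins.

```python
import ipaddress

# Address space sizes: 32-bit IPv4 and 128-bit IPv6 addresses.
ipv4_total = 2 ** 32   # 4,294,967,296 (a little over 4 billion)
ipv6_total = 2 ** 128  # about 3.4 x 10^38

# Hypothetical routing table: prefix -> next-hop label.
routes = {
    ipaddress.ip_network("0.0.0.0/0"): "default upstream",
    ipaddress.ip_network("128.6.0.0/16"): "toward Rutgers",
    ipaddress.ip_network("128.6.4.0/24"): "hypothetical more specific route",
}


def lookup(destination: str) -> str:
    """Return the next hop for the longest prefix containing destination."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in routes if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return routes[best]
```

With only the /16 and the default route, a router carries two entries instead of tens of thousands of host routes. Each more specific prefix adds precision at the cost of one more entry to store and process, which is the tradeoff the paragraph describes.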


Wheeler’s Fundamental Theorem of Software Engineering

Any problem in computer science can be solved with another level of indirection.

This isn’t really a theorem but more of a joke that happens to be a general approach to solving computer science problems. The classic example of this is a fully normalized database where one has but two key-value pairs per table, and one must fly through many levels of indirection to put together any sort of coherent informational view. That said, our traffic often passes through tunnels, be they VPN or MPLS or other forms. IP was designed to easily allow for this, and there is a project called LISP (the Locator/ID Separation Protocol) which builds on this principle.
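Tunneling as a level of indirection can be illustrated with a toy model in which a packet's payload is simply another packet. This is a sketch under stated assumptions, not how any real VPN, MPLS, or LISP implementation represents packets: the `Packet` class and the `encapsulate`, `decapsulate`, and `depth` helpers are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Packet:
    """Toy packet: two addresses and a payload (bytes or an inner packet)."""
    src: str
    dst: str
    payload: Union[bytes, "Packet"]


def encapsulate(inner: Packet, tunnel_src: str, tunnel_dst: str) -> Packet:
    """Wrap inner in an outer packet addressed between the tunnel endpoints."""
    return Packet(src=tunnel_src, dst=tunnel_dst, payload=inner)


def decapsulate(outer: Packet) -> Packet:
    """Remove one level of indirection at the tunnel exit."""
    if not isinstance(outer.payload, Packet):
        raise ValueError("packet does not carry an inner packet")
    return outer.payload


def depth(packet: Packet) -> int:
    """Count how many tunnel levels wrap the innermost payload."""
    levels = 0
    while isinstance(packet.payload, Packet):
        packet = packet.payload
        levels += 1
    return levels
```

Routers along the tunnel only look at the outer addresses; the original source and destination ride along untouched until the far end calls something like `decapsulate`.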


The Clark Proviso to Wheeler’s Statement

… at the cost of performance.

That is, indirection requires additional information, and that information must be stored, transmitted, and processed. It’s not to say it isn’t worth doing at times, but tradeoffs must be examined.
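One way to put a rough number on the transmission part of that cost is to count header bytes. The sketch below assumes plain IPv4-in-IPv4 tunneling with the minimum 20-byte IPv4 header and a 1500-byte MTU; both constants are assumptions chosen for illustration, and real tunnels (VPNs, MPLS) add different amounts. The function name `payload_fraction` is hypothetical.

```python
IPV4_HEADER_BYTES = 20  # minimum IPv4 header, no options
MTU_BYTES = 1500        # assumed link MTU (common on Ethernet)


def payload_fraction(tunnel_levels: int) -> float:
    """Fraction of each full-size packet left for payload.

    One header for the original packet plus one per level of tunneling.
    """
    header_bytes = IPV4_HEADER_BYTES * (1 + tunnel_levels)
    return (MTU_BYTES - header_bytes) / MTU_BYTES
```

Each extra level of indirection shaves a little more off the usable payload, before counting the state and processing needed at the tunnel endpoints.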

Wilton’s Mistake

I thought that I would just check my email one last time.

Courtesy of Rob Wilton. We’ve all said this, and then regretted it.

The Berners-Lee Declaration

This is for everyone.

And so it is.