When you see a URL like http://www.ofcourseimright.com, your computer needs to convert the domain name “www.ofcourseimright.com” to an IP address like 62.12.173.114. As with everything else on the Internet, there are more or less secure ways of doing this. Even the least secure way is actually pretty hard to attack. While false information is returned by the DNS all the time, usually it’s benign. There are still some reasons to move to a more secure domain name system:

- Attackers are getting more sophisticated, and they may attack resolvers (the services that change names to numbers). Service providers, hotels, and certain WiFi networks are subject to these sorts of attacks, and they are generally unprepared for them.

- There are a number of applications that could make use of the domain name system in new ways if it were more secure.

Still, it’s good that the current system hasn’t been seriously attacked, because the approach the Internet Engineering Task Force (IETF) recommends – DNSSEC – is a major pain in the patoot for mere mortals to use. There is some good news: some very smart people have begun to document how to manage All of This®. What’s more, some DNS registrars who manage your domain names for you will, for a price, secure your domain name. However, doing so truly hands the registrar the keys to the castle. And so what follows is my adventure in securing a domain name.

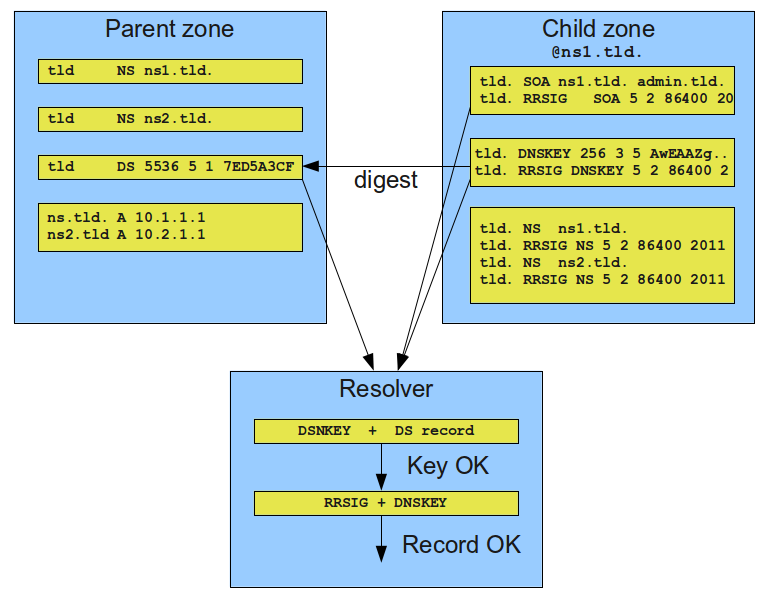

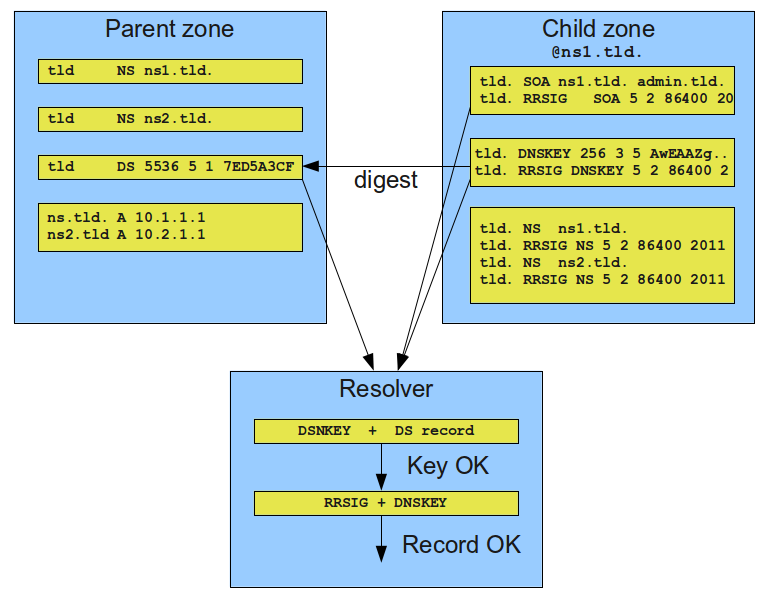

DNSSEC is a fairly complex beast, and this article is not going to explain it all. The moving parts to consider are how the zone signs its information, how that information is authorized (in this case, by the parent zone), and how the resolver validates what it receives. It is important to remember that for any such system there must be a chain of trust between the publisher and the consumer for the consumer to reasonably believe what the publisher is saying. DNSSEC accomplishes this by placing a hash of the child zone’s key-signing key, in the form of a DS record, in its parent zone. That way you know that the parent (like .com) has some reason to believe that information signed with a particular key belongs to the child.
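A deliberately simplified Python toy can make the chain-of-trust idea concrete. It models only the hash links: each zone has a stand-in key, each parent records a SHA-256 digest of each child’s key, and a validator walks from a trusted digest for the root down to the leaf. Real DNSSEC also checks RRSIG signatures at every step and hashes a specific wire format, so the `Zone` class and `validate_chain` function here are hypothetical illustrations, not any resolver’s API.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Zone:
    """Toy zone: a stand-in key plus the digests it vouches for in its children."""
    name: str
    key: bytes
    child_digests: dict = field(default_factory=dict)  # child name -> hex digest


def digest(key: bytes) -> str:
    return hashlib.sha256(key).hexdigest()


def validate_chain(anchor_digest: str, zones: list) -> bool:
    """Walk zones ordered from the root down. Each zone's key must match the
    digest published one level up (the root's must match the trust anchor)."""
    expected = anchor_digest
    for zone, child in zip(zones, zones[1:] + [None]):
        if digest(zone.key) != expected:
            return False  # this key is not the one its parent vouched for
        if child is not None:
            expected = zone.child_digests.get(child.name)
            if expected is None:
                return False  # parent publishes no digest for the child: chain broken
    return True


root = Zone(".", b"root-key")
com = Zone("com.", b"com-key")
site = Zone("ofcourseimright.com.", b"site-key")
root.child_digests["com."] = digest(com.key)
com.child_digests["ofcourseimright.com."] = digest(site.key)

print(validate_chain(digest(root.key), [root, com, site]))  # True
site.key = b"forged-key"  # an attacker substitutes the child's key
print(validate_chain(digest(root.key), [root, com, site]))  # False
```

The point of the toy is that a forged key anywhere below the trust anchor breaks the walk, because the parent’s published digest no longer matches.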

From the child zone’s perspective (e.g., ofcourseimright.com), there are roughly five steps to securing a domain with DNSSEC:

- Generate zone signing key pairs (ZSKs). These keys will be used to sign and validate each record in the zone.

- Generate key signing key pairs (KSKs). These keys are used to sign and validate the zone signing keys. They are known in the literature as the Secure Entry Point (SEP) because there aren’t enough acronyms in your life.

- Sign the zone.

- Generate a hash of the DNSKEY records for the KSKs in the form of a DS record.

- Publish the DS in the parent zone. This provides the means for anyone to confirm which keys belong to your zone.
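Step four is mechanical enough to sketch. The following Python follows the DS digest and key tag definitions in RFC 4034 (the SHA-256 digest type, 2, comes from RFC 4509): the digest covers the owner name in canonical wire format followed by the DNSKEY RDATA, and the key tag is the checksum from RFC 4034 Appendix B. The function names are hypothetical; in practice BIND’s `dnssec-dsfromkey` or OpenDNSSEC would produce this for you.

```python
import base64
import hashlib
import struct


def name_to_wire(name: str) -> bytes:
    """Canonical (lower-case) DNS wire format of a domain name."""
    labels = [label for label in name.rstrip(".").lower().split(".") if label]
    return b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"


def dnskey_rdata(flags: int, protocol: int, algorithm: int, public_key_b64: str) -> bytes:
    """DNSKEY RDATA: 16-bit flags, 8-bit protocol, 8-bit algorithm, then the key."""
    return struct.pack("!HBB", flags, protocol, algorithm) + base64.b64decode(public_key_b64)


def key_tag(rdata: bytes) -> int:
    """Key tag checksum from RFC 4034 Appendix B (not valid for algorithm 1)."""
    acc = 0
    for i, byte in enumerate(rdata):
        acc += byte if i & 1 else byte << 8
    acc += (acc >> 16) & 0xFFFF
    return acc & 0xFFFF


def make_ds(owner: str, flags: int, protocol: int, algorithm: int, public_key_b64: str) -> str:
    """Build a presentation-format DS record (digest type 2, SHA-256) for a DNSKEY."""
    rdata = dnskey_rdata(flags, protocol, algorithm, public_key_b64)
    digest = hashlib.sha256(name_to_wire(owner) + rdata).hexdigest().upper()
    return f"{owner} IN DS {key_tag(rdata)} {algorithm} 2 {digest}"
```

For example, `make_ds("ofcourseimright.com.", 257, 3, 8, "AwEAAQ==")` yields a DS line with key tag 1803. The base64 string is a placeholder, not a real RSA key; flags 257 mark a KSK (zone key plus SEP bit), protocol 3 is the only valid value, and algorithm 8 is RSA/SHA-256.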

Steps one through four are generally pretty easy when done just once. The oldest and most widely used name server package, BIND, provides the tools to do this, although the instructions are not what I would consider to be straightforward.

Step five, however, is quite the pain. To start with, you must find a registrar who will accept your DS record. Very few allow this at all; for “.com” I have found only two. Furthermore, the means of accepting those records is far from standardized. For instance, at least one registrar insists that DS records be stored in the child zone. They are listed in the parent zone only after you have used the registrar’s web interface to select one of the DS records it finds there. Another registrar requires that you enter the DS record information in a web interface. It turns out this isn’t perfect either. For one thing, it’s error-prone, particularly as it relates to the validity duration of a signature.
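Since hand entry is where mistakes creep in, one modest safeguard is to sanity-check DS fields before pasting them into a registrar’s form. This Python sketch checks structure only (value ranges, hexadecimal digits, and digest length for the digest type); it cannot tell whether the digest actually matches your key, and it does nothing about signature validity periods. `check_ds_fields` is a hypothetical helper, not part of any registrar’s tooling.

```python
import re

# Digest sizes in bytes for common DS digest types:
# 1 = SHA-1, 2 = SHA-256, 4 = SHA-384 (type 3, GOST, is deliberately left out here).
DIGEST_BYTES = {1: 20, 2: 32, 4: 48}


def check_ds_fields(key_tag: int, algorithm: int, digest_type: int, digest_hex: str) -> list:
    """Return a list of problems; an empty list means the fields look plausible."""
    problems = []
    if not 0 <= key_tag <= 0xFFFF:
        problems.append("key tag must be between 0 and 65535")
    if not 1 <= algorithm <= 255:
        problems.append("algorithm must be between 1 and 255")
    digest = re.sub(r"\s+", "", digest_hex)  # tolerate whitespace pasted from a zone file
    expected = DIGEST_BYTES.get(digest_type)
    if expected is None:
        problems.append(f"unsupported digest type {digest_type}")
    if not re.fullmatch(r"[0-9A-Fa-f]+", digest):
        problems.append("digest must be non-empty hexadecimal")
    elif expected is not None and len(digest) != 2 * expected:
        problems.append(f"digest should be {2 * expected} hex digits, got {len(digest)}")
    return problems
```

A single dropped character in a 64-digit SHA-256 digest is exactly the kind of slip a web form will happily accept, and the length check catches it.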

This brings us to the real problem with DNSSEC: both ZSKs and KSKs have limited lifetimes, and the signatures made with them carry expiration dates. This is based on the well-established security notion that with enough computation power, any key can be broken in some period of time. But this also means that one has to not only repeat steps one through five periodically, but also do so in a way that respects the underlying caching semantics of the domain name system. And this is where mere mortals have run away. I know. I ran away some time ago.
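The caching constraint is easiest to see with numbers. Below is a simplified Python timeline for a pre-publish ZSK rollover, along the lines described in RFC 6781: the new key is published first, used for signing only after every cached copy of the old DNSKEY set has expired, and the old key is withdrawn only after every cached signature made with it has expired. All TTLs and delays are made-up inputs, the function name is hypothetical, and KSK rollovers add the parent’s DS TTL and the registrar round trip, which this sketch ignores.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ZskRolloverPlan:
    publish_new_key: datetime
    sign_with_new_key: datetime
    remove_old_key: datetime


def prepublish_zsk_rollover(start: datetime, dnskey_ttl: timedelta, max_zone_ttl: timedelta,
                            propagation_delay: timedelta,
                            safety: timedelta = timedelta(0)) -> ZskRolloverPlan:
    """Simplified pre-publish ZSK rollover timeline.

    1. Publish the new DNSKEY alongside the old one at `start`.
    2. Switch signing only once every cached copy of the old DNSKEY RRset
       (which lacks the new key) has expired.
    3. Remove the old key only once every cached signature made with it
       (on any record in the zone) has expired.
    """
    sign_at = start + propagation_delay + dnskey_ttl + safety
    remove_at = sign_at + propagation_delay + max_zone_ttl + safety
    return ZskRolloverPlan(start, sign_at, remove_at)


plan = prepublish_zsk_rollover(
    start=datetime(2024, 1, 1),
    dnskey_ttl=timedelta(hours=1),
    max_zone_ttl=timedelta(days=1),
    propagation_delay=timedelta(hours=12),
    safety=timedelta(hours=1),
)
print(plan.sign_with_new_key)  # 2024-01-01 14:00:00
print(plan.remove_old_key)     # 2024-01-03 03:00:00
```

Even with these modest inputs, one routine ZSK change spans more than two days, and the signature expirations have to be planned around that window.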

A tool to manage keying (and rekeying)

But now I’m trying again, thanks to several key developments, the first of which is a new tool called OpenDNSSEC. OpenDNSSEC takes as input a zone file, writes as output the signed zone, and will rotate keys on a configured schedule. The tool can also generate output that can be fed to other tools to update parent zones, such as “.com”, and it can manage multiple domains. I manage about six of them myself.

The tool is not entirely “fire and forget”. To start with, it has a substantial number of dependencies, none of which I would call showstoppers, but all of which take some effort from someone who knows something about installing UNIX software. For another, as I mentioned, some registrars require that DS records be in the child zone, and OpenDNSSEC doesn’t put them there. That’s a particular pain in the butt, because it means you must globally configure the system not to increment the serial number in the SOA record for a zone, append the DS records to the zone, and then reconfigure OpenDNSSEC to increment the serial number again. All of this is possible, but annoying. Two good solutions would be either to modify OpenDNSSEC or to change registrars. The latter is only an option for certain top-level domains.
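The manual step in the middle of that workaround is choosing a new SOA serial yourself after appending the DS records, since secondaries and caches only pick up a zone whose serial has gone forward. Here is a Python sketch of that choice, assuming the common YYYYMMDDnn serial convention; the comparison follows the serial-number arithmetic of RFC 1982, under which a 32-bit serial wraps around. Both helpers are hypothetical, and whether your OpenDNSSEC setup uses date-based serials depends on its configuration.

```python
from datetime import date

SERIAL_MOD = 2 ** 32
HALF = 2 ** 31


def serial_gt(a: int, b: int) -> bool:
    """RFC 1982 serial-number arithmetic: is serial `a` newer than `b`?"""
    return (a < b and b - a > HALF) or (a > b and a - b < HALF)


def next_serial(current: int, today: date) -> int:
    """Choose the next SOA serial, preferring the YYYYMMDDnn convention
    but never producing a value that secondaries would treat as older."""
    date_based = int(today.strftime("%Y%m%d")) * 100
    candidate = max(date_based, current + 1) % SERIAL_MOD
    if not serial_gt(candidate, current):
        # A jump of 2**31 or more would look like a step backwards.
        candidate = (current + 1) % SERIAL_MOD
    return candidate
```

For example, `next_serial(2024010105, date(2024, 1, 2))` returns `2024010200`, while on 2024-01-01 the same call returns `2024010106`.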

Choosing a Registrar

To make OpenDNSSEC most useful, one needs to choose a registrar that allows you to import DS records and also has a programmatic interface, so that OpenDNSSEC can call out to it when doing KSK rotations. In my investigations, I found such an organization in GKG.NET. These fine people provide a RESTful interface for managing DS records that supports adding, deleting, listing, and retrieving key information. It’s really just what the doctor ordered. There are other registrars that have various forms of programmatic interfaces, but not so much for the US three-letter TLDs.
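To give a feel for what such a programmatic interface involves, here is a Python sketch that builds requests for listing, adding, and deleting DS records, plus a small sender with error handling. Everything about the API shape is hypothetical: the base URL, paths, JSON field names, and bearer-token header are placeholders rather than GKG.NET’s actual interface, which you would need to take from their documentation.

```python
import json
import urllib.error
import urllib.request

# HYPOTHETICAL endpoint layout and auth scheme, for illustration only.
# A real registrar's URLs, field names, and authentication will differ.
API_BASE = "https://registrar.example/api/v1/domains"


def _request(method: str, path: str, token: str, payload=None) -> urllib.request.Request:
    body = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Authorization": f"Bearer {token}", "Accept": "application/json"}
    if body is not None:
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(f"{API_BASE}/{path}", data=body, headers=headers, method=method)


def list_ds(domain: str, token: str) -> urllib.request.Request:
    return _request("GET", f"{domain}/ds", token)


def add_ds(domain: str, token: str, key_tag: int, algorithm: int,
           digest_type: int, digest: str) -> urllib.request.Request:
    record = {"keyTag": key_tag, "algorithm": algorithm,
              "digestType": digest_type, "digest": digest}
    return _request("POST", f"{domain}/ds", token, record)


def delete_ds(domain: str, token: str, key_tag: int) -> urllib.request.Request:
    return _request("DELETE", f"{domain}/ds/{key_tag}", token)


def send(req: urllib.request.Request):
    """Perform the request and decode a JSON reply, surfacing HTTP errors clearly."""
    try:
        with urllib.request.urlopen(req, timeout=30) as resp:
            raw = resp.read()
    except urllib.error.HTTPError as err:
        raise RuntimeError(f"{req.get_method()} {req.full_url} failed: {err.code} {err.reason}") from err
    return json.loads(raw) if raw else None
```

Separating request construction from sending keeps the part that talks to the network small, which makes the rest easy to check without touching a live registrar account.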

The glue

Now this just leaves the glue between OpenDNSSEC and GKG.NET. What is needed: a library to parse JSON, another to manage HTTP requests, and a whole lot of error handling. These requirements aren’t that significant, and so one can pick one’s language. Mine was Perl, and it’s taken about 236 lines (that’s probably 300 in PHP, 400 in Java, and 1,800 in C).
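The following is not the Perl glue described above; it is a minimal Python sketch of one piece such glue needs: parsing the DS records the signer wants published and working out which records to add at the registrar and which to delete. It assumes single-line, presentation-format DS records (no parentheses), and `DS`, `parse_ds`, and `plan_changes` are hypothetical names.

```python
from typing import Iterable, List, NamedTuple, Tuple


class DS(NamedTuple):
    key_tag: int
    algorithm: int
    digest_type: int
    digest: str


def parse_ds(line: str) -> DS:
    """Parse a line such as 'example.com. 3600 IN DS 1803 8 2 AB12...';
    raise ValueError on anything that is not a complete DS record."""
    fields = line.split()
    types = [f.upper() for f in fields]
    if "DS" not in types:
        raise ValueError(f"not a DS record: {line!r}")
    i = types.index("DS")
    if len(fields) < i + 5:
        raise ValueError(f"truncated DS record: {line!r}")
    tag, alg, dtype = (int(x) for x in fields[i + 1:i + 4])  # int() raises ValueError on junk
    return DS(tag, alg, dtype, "".join(fields[i + 4:]).upper())


def plan_changes(wanted: Iterable[DS], published: Iterable[DS]) -> Tuple[List[DS], List[DS]]:
    """Return (to_add, to_delete) so the registrar's DS set matches `wanted`."""
    wanted_set, published_set = set(wanted), set(published)
    return sorted(wanted_set - published_set), sorted(published_set - wanted_set)
```

During a KSK rollover you would normally add the new DS and wait for the old one’s TTL to expire before deleting it, so a real script would act on `to_add` and `to_delete` at different times rather than in one pass.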

So what to do?

If you want to secure your domain name and you don’t mind your registrar holding onto your keys and managing your domain, then just let them do it. It is by far the easiest approach. But tools like OpenDNSSEC and registrars like GKG are definitely improving the situation for those who want to hold the keys themselves. One lingering concern I have about all of this is the sheer number of moving parts. Security isn’t simply about cryptographic assurance. It’s also about how many things can go wrong, and how many points of attack there are. What all of this proves is that while DNSSEC can in theory make names secure, in practice, even though the system has been around for a good few years, the dizzying amount of technical knowledge needed to keep it functional is a substantial barrier. And there will assuredly be bugs found in just about all the software involved, including Perl, Ruby, SQLite, LDNS, libxml2, and of course the code I wrote. This level of complexity deserves further consideration if we really want people to secure their name-to-address bindings.
